A new business model powered by the synergy of GenAI and terminology

AI and terminology make a strong team. While AI amplifies the efficiency of numerous aspects of terminology work, terminology makes generative AI (GenAI) output more reliable, reduces hallucinations, and ensures compliance with corporate language.

This new synergy is revolutionizing the industry. It is transforming the work of terminologists, enhancing the benefits of terminology services, and streamlining the entire terminology business model. As AI reduces the cost of terminology management, the benefits of terminology data in a corporate environment continue to grow. As a result, terminology becomes a valuable asset, turning from a cost driver into a key source of value.

Optimizing GenAI output: less hallucination, own corporate language, more consistency

GenAI has two major weaknesses that make its commercial use unpredictable, unreliable, and risky: hallucinations and terminological inconsistency. Hallucinations are plausible-sounding but factually incorrect outputs, typically produced when the model lacks sufficient contextual information. Linguistic or terminological inconsistencies arise because the models were trained on vast amounts of highly heterogeneous data. Let's look at how these weaknesses are generally addressed, and why terminology can be a game changer here.

Prompt engineering

Probably the most discussed option is prompt engineering, which, according to Dina Genkina in IEEE Spectrum, is "finding a clever way to phrase [a] query to a Large Language model (LLM) ... to get the best results"1. This method lets you add context and "knowledge" through the prompt, steering the model's response with instructions that describe the task and how to solve it. Prompt engineering can take place either in the user message itself or, better yet, in the system prompt that primes the model. Without going into detail, prompt engineering has some practical shortcomings, the major one being the inherent token limitation of AI models, that is, the maximum number of tokens a model can process in a single request (its context window).

While token limits keep increasing and vary from model to model, this constraint does not allow large amounts of additional information, such as an entire document or an exported termbase, to be included in the prompt. And even if token limits one day disappear altogether, another challenge remains: models have been shown not only to become slower with more context but also to "miss" or "forget" important information in long inputs ("Lost in the Middle", Liu et al. 2024; "RULER", Hsieh et al. 2024). Furthermore, the inherent lack of transparency can make results difficult to predict and control. Finally, cost matters: although LLM providers charge less for input tokens than for output tokens, every additional token in the prompt is billed.

RAG - Retrieval Augmented Generation

Because of these limitations, RAG (Retrieval Augmented Generation) has emerged as the leading strategy for adding context and "knowledge" to GenAI.

With RAG, existing information can be uploaded in advance: documents or, in our specific case, even entire termbase exports in formats like TBX or CSV. The data is split into chunks of a predefined size (e.g., 600 tokens each). A special semantic model, a so-called embedding model, then calculates a mathematical representation of the "meaning" of each chunk, which is stored in a vector database; this process is known as "embedding". You can picture each chunk as a point (or, more precisely, a vector) in a high-dimensional space. The assumption is that the closer two points are to each other, the more similar their meaning.
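To make the chunking step concrete, here is a minimal Python sketch of fixed-size chunking. It assumes whitespace-separated words as a stand-in for model tokens (real pipelines count tokens with the embedding model's tokenizer) and uses a tiny, hypothetical pipe-separated termbase export. With a chunk size of six, the boundaries ignore the entry structure, so one chunk ends up mixing the end of one concept with the start of another.

```python
def chunk_tokens(text: str, chunk_size: int) -> list[str]:
    """Split text into consecutive chunks of at most chunk_size whitespace-separated words.

    Assumption: words stand in for model tokens; real pipelines use a tokenizer.
    """
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


# Hypothetical two-concept termbase export: source | target | definition
export = (
    "Welle | wave | Movement of the ocean surface\n"
    "Welle | shaft | Rotating machine element that transmits torque"
)

chunks = chunk_tokens(export, chunk_size=6)
for number, chunk in enumerate(chunks):
    print(number, chunk)
```

Production chunkers often add overlap between chunks or split on sentence boundaries, but any size-based split can still cut through a termbase entry.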

In a RAG process, the user's query or prompt is first "embedded" into the same vector space as the uploaded documents. The system then compares the query vector with the stored chunk vectors and retrieves (R) the most semantically similar content. These chunks are added to the user prompt, thus "augmenting" (A) its context. Finally, the augmented prompt is passed to the GenAI model to generate (G) the output.
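The retrieve and augment steps can be sketched as follows. This is an illustration only: `toy_embed` is a hypothetical bag-of-words stand-in for a real embedding model, which would produce dense vectors that capture meaning rather than word overlap. Cosine similarity, a common measure of closeness in embedding space, ranks the stored chunks against the query, and the best match is prepended to the prompt.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(word.strip(".,?!:").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """R: rank stored chunks by similarity to the query and keep the top k."""
    q = toy_embed(query)
    return sorted(chunks, key=lambda c: cosine(q, toy_embed(c)), reverse=True)[:k]


def augment(query: str, context: list[str]) -> str:
    """A: prepend the retrieved chunks to the user's question."""
    return "Context:\n" + "\n".join(context) + "\n\nQuestion: " + query


chunks = [
    "The propeller shaft transmits torque from the engine.",
    "Waves are movements of the ocean surface.",
    "Invoices are sent at the end of each month.",
]
query = "What transmits torque from the engine?"
prompt = augment(query, retrieve(query, chunks))
print(prompt)  # G: this augmented prompt would then be sent to the LLM
```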

This approach is extremely useful for many use cases:

- Documents can be uploaded, "embedded", and then used for queries. Example: "How can I do xyz?"

- The same vector database that holds your content can also support terminology work, for example to generate definitions based on your own information.

- Terminology databases can be uploaded and used when generating or translating documents.

- The use of taxonomies or knowledge graphs (concept maps) for RAG applications is also particularly exciting. Through prompting, hybrid search methods, or even special fine-tuning, LLMs can learn to traverse (i.e., navigate) this data and use it in context.

- Looking further ahead, RAG opens the door to reasoning engines, recommender systems, auto-classification of content, and more.

However, RAG also has some serious disadvantages, especially for our use case of incorporating terminology into GenAI output:

- The entire RAG process relies on approximate semantic similarity, which lacks the determinism that terminologists expect. In terminology, meanings are explicitly defined, like 'word A means abc, word B means xyz', whereas embeddings represent meaning in an abstract way, conditioned by all the tokens in a chunk.

- The chunking step is hard to control, as it can split the data at inconvenient points, such as in the middle of a terminological concept.

- Once the data is embedded, it is difficult to filter the data or use only the relevant terms from the termbase.

- Content generation with RAG is slower because of the additional embedding and retrieval steps.

- RAG relies on pre-embedded data, so terminology is not available in real time: changes to the termbase take effect only after the data has been re-embedded.

Given that termbases are normally prescriptive, deterministic, and already very precise, the RAG approach might not yield the expected results.

Terminology Augmented Generation (TAG): a new approach for reliable GenAI output

We are therefore developing a new method of using terminology in GenAI: TAG, or Terminology Augmented Generation.

TAG combines several key elements:

- Classic, deterministic, and precise terminology searches in a termbase, in contrast to probabilistic retrieval from vectorized data repositories.

- Exact retrieval and filtering of the required data, given that terminology databases are very well-structured and comply with principles such as concept orientation, term autonomy, and data elementality.

- Precise output of the needed results in easy-to-process formats generated for that very purpose, such as prose-like text or lightly structured formats like Markdown or JSON.

- API access to terminology in real time.
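A minimal sketch of these elements working together, assuming a small in-memory termbase. All entries, field names, and functions here are hypothetical; a real implementation would query the termbase through its API. The lookup is a deterministic whole-word match rather than a similarity search, a subject-area filter selects the relevant concepts, and the result is serialized as compact Markdown lines before being added to the prompt.

```python
import re
from typing import Optional

# Hypothetical in-memory termbase: one dict per concept.
TERMBASE = [
    {"id": "C1", "subject": "marine engineering",
     "terms": {"de": "Welle", "en": "shaft"},
     "definition": "Rotating machine element that transmits torque"},
    {"id": "C2", "subject": "oceanography",
     "terms": {"de": "Welle", "en": "wave"},
     "definition": "Movement of the ocean surface"},
    {"id": "C3", "subject": "marine engineering",
     "terms": {"de": "Schiffsschraube", "en": "propeller"},
     "definition": "Rotating bladed device that propels a ship"},
]


def lookup(text: str, src: str, subject: Optional[str] = None) -> list[dict]:
    """Deterministic search: whole-word matches of source terms, optionally filtered by subject."""
    hits = []
    for entry in TERMBASE:
        term = entry["terms"].get(src)
        if term and re.search(rf"\b{re.escape(term)}\b", text):
            if subject is None or entry["subject"] == subject:
                hits.append(entry)
    return hits


def to_markdown(hits: list[dict], src: str, tgt: str) -> str:
    """Serialize only the needed fields as compact Markdown list items."""
    return "\n".join(f"- {h['terms'][src]} -> {h['terms'][tgt]} ({h['definition']})" for h in hits)


def build_prompt(text: str, src: str = "de", tgt: str = "en", subject: Optional[str] = None) -> str:
    """Augment the task with the retrieved terminology."""
    glossary = to_markdown(lookup(text, src, subject), src, tgt)
    return f"Use this terminology:\n{glossary}\n\nTranslate into {tgt}:\n{text}"


sentence = "Die Welle treibt die Schiffsschraube an."
print(build_prompt(sentence, subject="marine engineering"))
```

With the subject filter set to marine engineering, only the "shaft" reading of "Welle" reaches the prompt; without it, both concepts are returned and the model receives both definitions for disambiguation.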

So, what can terminologists and terminology data contribute to a corporate GenAI strategy?

Classic search and filters in termbases

Terminologists are familiar with the classic search functions of termbases, which include string-based search algorithms (e.g., wildcard, fuzzy, or full-text search) and filter options based on terminological metadata such as category, usage status, or subject area. Furthermore, some systems support terminology recognition in natural language texts: they detect terms stored in the termbase even when these appear as morphological variants (such as inflected forms) in the text.
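The search types mentioned above map onto simple, deterministic operations. The following sketch uses Python's standard `fnmatch` module for wildcard search and `difflib.get_close_matches` for fuzzy search, plus a metadata filter. The records are hypothetical, and commercial termbases use their own, more sophisticated algorithms (including morphological analysis for term recognition, which is not shown here).

```python
import difflib
import fnmatch

# Hypothetical term records with usage status and subject area.
ENTRIES = [
    {"term": "Welle", "status": "preferred", "subject": "marine engineering"},
    {"term": "Antriebswelle", "status": "admitted", "subject": "marine engineering"},
    {"term": "Wellenkupplung", "status": "preferred", "subject": "marine engineering"},
    {"term": "Welle", "status": "preferred", "subject": "oceanography"},
    {"term": "Schraube", "status": "deprecated", "subject": "marine engineering"},
]


def wildcard_search(pattern: str, entries: list[dict]) -> list[dict]:
    """'*' matches any run of characters, '?' a single character (case-sensitive)."""
    return [e for e in entries if fnmatch.fnmatchcase(e["term"], pattern)]


def fuzzy_search(query: str, entries: list[dict], cutoff: float = 0.8) -> list[dict]:
    """Tolerate typos: keep terms whose similarity ratio to the query is at least cutoff."""
    terms = sorted({e["term"] for e in entries})
    close = difflib.get_close_matches(query, terms, n=3, cutoff=cutoff)
    return [e for e in entries if e["term"] in close]


def filter_entries(entries: list[dict], **criteria: str) -> list[dict]:
    """Metadata filter: keep entries whose fields equal all given values."""
    return [e for e in entries if all(e.get(k) == v for k, v in criteria.items())]


print([e["term"] for e in wildcard_search("Welle*", ENTRIES)])
print(filter_entries(fuzzy_search("Wele", ENTRIES), subject="marine engineering"))
print([e["term"] for e in filter_entries(ENTRIES, status="deprecated")])
```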

However, AI teams are often unfamiliar with these search options and do not realize their full potential. When conducting terminology searches, they typically expect a "word list" as the output, but instead receive entire term entries containing the full set of metadata, synonyms, and languages, which are difficult for them to parse. In addition, complex termbase schemas with numerous fields and multiple synonyms per concept can be daunting for AI teams to navigate. One of the cornerstones of terminology work, the separation into entry, language, and term levels, is difficult for technical AI specialists to grasp, especially if identical fields are used at different levels.
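To illustrate the gap between a concept-oriented entry and the "word list" AI teams expect, here is a sketch with a hypothetical entry nested by entry, language, and term level. `flatten` turns it into one row per term while carrying the entry-level subject and concept ID down to each row, and `word_list` reduces it to the preferred source and target terms. The data layout is an assumption, not any particular termbase's schema.

```python
# Hypothetical concept-oriented entry: entry level -> language level -> term level.
ENTRY = {
    "id": "C1",
    "subject": "marine engineering",  # entry level: applies to all languages and terms
    "languages": {
        "de": {
            "definition": "Maschinenelement, das ein Drehmoment überträgt",  # language level
            "terms": [  # term level: synonyms, each with its own usage status
                {"term": "Welle", "status": "preferred"},
                {"term": "Antriebswelle", "status": "admitted"},
            ],
        },
        "en": {
            "definition": "Rotating machine element that transmits torque",
            "terms": [
                {"term": "shaft", "status": "preferred"},
                {"term": "axle", "status": "deprecated"},
            ],
        },
    },
}


def flatten(entry: dict) -> list[dict]:
    """One row per term, inheriting entry-level data so each row is self-contained."""
    rows = []
    for lang, lang_sec in entry["languages"].items():
        for t in lang_sec["terms"]:
            rows.append({"concept": entry["id"], "subject": entry["subject"],
                         "lang": lang, "term": t["term"], "status": t["status"]})
    return rows


def word_list(entry: dict, src: str, tgt: str) -> dict[str, str]:
    """Reduce the entry to the preferred source term -> preferred target term."""
    def preferred(lang: str) -> str:
        return next(t["term"] for t in entry["languages"][lang]["terms"] if t["status"] == "preferred")
    return {preferred(src): preferred(tgt)}


for row in flatten(ENTRY):
    print(row)
print(word_list(ENTRY, "de", "en"))
```

Keeping the concept ID on each flattened row preserves the link between synonyms and translations, which a plain word list would otherwise lose.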

Terminologists are also generally well-versed in working with metadata-based filters, including cross-references, relationships, and taxonomies, to retrieve just the content they need. We simply know how to find exactly what we are looking for. Communicating this capability to those driving AI initiatives is essential.

Terminology formats for TAG

LLMs can handle standard termbase export formats, such as TBX or CSV, relatively well out of the box. It is usually sufficient to explain the structure to the LLM, for example, what the XML elements in TBX mean. However, the many tags are unnecessary tokens that add cost and cause problems by "distracting" the model as it searches the context for relevant information, leading to mistakes in the output.

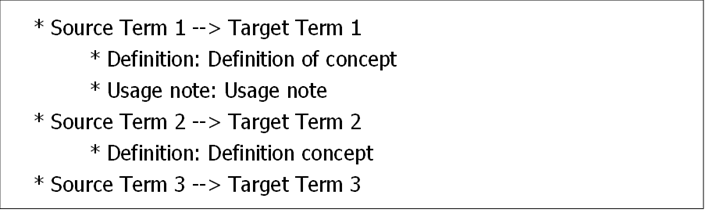
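The token overhead of TBX markup can be made tangible with a small sketch. It parses a simplified, hypothetical TBX-style fragment (element names modeled on the TBX conceptEntry/langSec/termSec structure, but not a complete or validated TBX file) with Python's `xml.etree.ElementTree` and re-serializes the same core information as JSON and as a single Markdown line. Character counts are printed only as a rough proxy for tokens; actual token counts depend on the model's tokenizer.

```python
import json
import xml.etree.ElementTree as ET

# ElementTree expands the reserved xml: prefix to this namespace URI.
XML_LANG = "{http://www.w3.org/XML/1998/namespace}lang"

# Simplified, hypothetical TBX-style fragment (not a complete, validated TBX document).
TBX = """<body>
  <conceptEntry id="C1">
    <descrip type="subjectField">marine engineering</descrip>
    <langSec xml:lang="de">
      <termSec>
        <term>Welle</term>
        <termNote type="administrativeStatus">preferredTerm-admn-sts</termNote>
      </termSec>
    </langSec>
    <langSec xml:lang="en">
      <descrip type="definition">Rotating machine element that transmits torque</descrip>
      <termSec>
        <term>shaft</term>
        <termNote type="administrativeStatus">preferredTerm-admn-sts</termNote>
      </termSec>
    </langSec>
  </conceptEntry>
</body>"""


def compact(tbx: str) -> list[dict]:
    """Keep only subject, one term per language, and definitions; drop the markup."""
    records = []
    for concept in ET.fromstring(tbx).iter("conceptEntry"):
        record = {"subject": concept.findtext("descrip[@type='subjectField']")}
        for lang_sec in concept.findall("langSec"):
            lang = lang_sec.get(XML_LANG)
            record[lang] = lang_sec.findtext("termSec/term")
            definition = lang_sec.findtext("descrip[@type='definition']")
            if definition:
                record[f"definition_{lang}"] = definition
        records.append(record)
    return records


records = compact(TBX)
as_json = json.dumps(records, ensure_ascii=False)
as_markdown = "\n".join(
    f"- {r['de']} -> {r['en']} ({r['subject']}: {r['definition_en']})" for r in records
)
# Character counts as a rough proxy for tokens.
print(len(TBX), len(as_json), len(as_markdown))
print(as_markdown)
```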

In contrast, output in a simple markup language such as Markdown, or serialization formats such as YAML or JSON, can be simpler and more precise. These formats involve fewer tokens and are also more widely comprehensible. For example, a simple retrieval for three terms for translation purposes could return something like this:

LLMs immediately understand this, especially with a one-shot explanation. As they can also effectively handle formats that are very close to natural language, the context can be expressed in prose. In the example below, the TAG call returns important information for the ambiguous German term "Welle", providing the necessary context for accurate understanding and processing:

Welle -> wave (Definition: Movement of the ocean surface)

Welle -> shaft (Definition: Coupling element between a ship's engine and the propeller)

Or even simpler:

Translate "Welle" as "wave" if it refers to the movement of the ocean surface.
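Both the arrow notation and the prose instruction can be generated from the same termbase records. A minimal sketch, assuming hypothetical records with `source`, `target`, and `definition` fields:

```python
# Hypothetical termbase records for the ambiguous German term "Welle".
RECORDS = [
    {"source": "Welle", "target": "wave", "definition": "movement of the ocean surface"},
    {"source": "Welle", "target": "shaft", "definition": "rotating machine element that transmits torque"},
]


def as_arrows(records: list[dict]) -> list[str]:
    """Lightly structured notation: term -> translation (Definition: ...)."""
    return [f'{r["source"]} -> {r["target"]} (Definition: {r["definition"]})' for r in records]


def as_instructions(records: list[dict]) -> list[str]:
    """Prose notation: one natural-language rule per concept."""
    return [f'Translate "{r["source"]}" as "{r["target"]}" if it refers to the {r["definition"]}.'
            for r in records]


print("\n".join(as_arrows(RECORDS)))
print("\n".join(as_instructions(RECORDS)))
```

Which notation works better can depend on the model and the task; since both come from the same data, switching between them costs nothing on the termbase side.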

API access in real time

Modern GenAI platforms let a model call functions in other applications, such as a termbase search, through Application Programming Interfaces (APIs), eliminating the need for exports and imports.

With OpenAI's function calling, for instance, a "terminology lookup" function can be defined, stored, and used during the content generation process. This bypasses the traditional RAG approach of querying a vector database: instead, the model triggers a termbase search that returns only the required data in a precise, immediately usable form, either structured or as prose, which leads to a more accurate and efficient outcome.
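A sketch of the application side of such a call, assuming OpenAI's Chat Completions tools format, in which each tool is described by a name, a description, and a JSON Schema for its parameters. The tool name `terminology_lookup`, its parameters, and the dictionary standing in for the termbase are all hypothetical, and no request to OpenAI is made here. When the model decides to call the tool, the application receives the function name and a JSON string of arguments, runs the lookup, and returns the result as a JSON string.

```python
import json

# Tool definition in the shape used by OpenAI's Chat Completions "tools" parameter.
# The function name, description, and parameters are hypothetical.
TERMINOLOGY_TOOL = {
    "type": "function",
    "function": {
        "name": "terminology_lookup",
        "description": "Return approved target-language terms for the given source terms.",
        "parameters": {
            "type": "object",
            "properties": {
                "terms": {"type": "array", "items": {"type": "string"}},
                "source_lang": {"type": "string"},
                "target_lang": {"type": "string"},
            },
            "required": ["terms", "source_lang", "target_lang"],
        },
    },
}

# Hypothetical stand-in for the termbase's own search API.
_TERMBASE = {("de", "en"): {"Schiffsschraube": "propeller", "Ruder": "rudder"}}


def terminology_lookup(terms: list[str], source_lang: str, target_lang: str) -> dict[str, str]:
    """Exact lookup: return only the terms found in the termbase."""
    table = _TERMBASE.get((source_lang, target_lang), {})
    return {t: table[t] for t in terms if t in table}


def handle_tool_call(name: str, arguments: str) -> str:
    """Execute the function the model requested; arguments arrive as a JSON string."""
    if name != TERMINOLOGY_TOOL["function"]["name"]:
        raise ValueError(f"unknown tool: {name}")
    result = terminology_lookup(**json.loads(arguments))
    return json.dumps(result, ensure_ascii=False)


print(handle_tool_call("terminology_lookup",
                       '{"terms": ["Ruder", "Anker"], "source_lang": "de", "target_lang": "en"}'))
```

In a full round trip, that string would be sent back to the model in a message with the role `tool` and the matching `tool_call_id`, after which the model continues generating with the retrieved terminology.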

TAG: The best of both worlds

TAG therefore combines the best of both worlds, merging terminological expertise in retrieving precisely the required data in a simple format with the IT knowledge needed to integrate API calls directly into GenAI applications.

Examples of TAG applications include:

- Parsing content to automatically identify and fix incorrect or unknown terminology, ensuring the output is compliant with your corporate language.

- Retrieving precise context information or definitions for terms used in a prompt or generated output. Even retrieving related concept information is possible by traversing relationships in the termbase.

- Improving or verifying translation output by retrieving the source-language terminology and providing both correct and incorrect translations in the target language, along with definitions or usage information. This enables the model to accurately translate even sentences containing ambiguous words such as homonyms.

- Enabling interactive user experiences with the information stored in a termbase ("chat with the termbase").
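The first example can be sketched as a deterministic check against usage status. The mapping from deprecated to preferred terms below is hypothetical; in practice it would come from a termbase query filtered on usage status. `check_terms` reports each hit with its position so the findings can be passed to the LLM (or a reviewer), while `fix_terms` applies the replacements directly.

```python
import re

# Hypothetical mapping from deprecated terms to preferred terms, e.g. from a
# termbase query filtered on usage status.
DEPRECATED = {"e-mail": "email", "log-in": "sign-in", "harddisk": "hard drive"}


def _pattern(term: str) -> str:
    # Whole-word, literal match of the term.
    return rf"\b{re.escape(term)}\b"


def check_terms(text: str) -> list[tuple[int, str, str]]:
    """Report (position, found term, preferred term) for every deprecated term."""
    findings = []
    for bad, good in DEPRECATED.items():
        for m in re.finditer(_pattern(bad), text, flags=re.IGNORECASE):
            findings.append((m.start(), m.group(0), good))
    return sorted(findings)


def fix_terms(text: str) -> str:
    """Replace deprecated terms mechanically (ignores capitalization and inflection)."""
    for bad, good in DEPRECATED.items():
        text = re.sub(_pattern(bad), good, text, flags=re.IGNORECASE)
    return text


draft = "Send an e-mail after the log-in."
print(check_terms(draft))
print(fix_terms(draft))
```

Blind replacement like this ignores capitalization and inflection, which is one reason to hand the findings to the model as context rather than rewrite the text mechanically.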

Our research has identified the following advantages of TAG compared to traditional RAG methods:

- TAG is more efficient in terms of processing speed and token consumption, making it faster and cheaper than RAG.

- TAG accesses specialized terminology in real time, providing up-to-date and specific information.

- TAG delivers precise yet flexible search results to the LLM to meet specific needs rather than "probably" relevant data chunks.

- TAG is simple to implement and easy for users to understand, making it a more accessible and user-friendly solution.

Implications for terminology management

So, what does all this mean for terminology work? The good news is that a well-maintained termbase already contains all the data TAG needs, making it a valuable resource. Using the termbase for GenAI also provides an excellent argument for meticulously maintaining metadata: classifications (for filtering or content selection), definitions, examples, and context information, but also term type, grammatical gender, part of speech, and similar information. Because GenAI can directly process and leverage this metadata, maintaining it becomes an essential part of effective terminology management. By maintaining a high-quality termbase, organizations can unlock the full potential of GenAI and TAG, leading to improved efficiency, accuracy, and decision-making.

The following data categories have proven to be particularly useful for GenAI in our experiments:

- Definition (including source): to resolve ambiguities and provide clarity

- Examples and context sentences: as a guide to implementation in generated outputs, ensuring correct usage

- Subject area or other classifications: to enable filtering and to provide the LLM with contextual information for disambiguation

- Usage status: to distinguish between preferred and deprecated terms, ensuring that only approved terms are used

- Usage notes: to differentiate usage according to context, providing nuanced guidance on term application

- Grammatical information: especially important for ambiguous terms, such as English loan words in other languages, to ensure correct grammatical usage
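One way to put these data categories to work is to render only the filled-in fields of each record as short labeled lines and to drop deprecated terms before anything reaches the prompt. The `TermRecord` class and its field names are a hypothetical sketch, not a standard schema. The example uses the English loan word "E-Mail" in German, where grammatical information such as gender is exactly the kind of data the list above refers to, alongside a hypothetical deprecated spelling.

```python
from dataclasses import dataclass, field


@dataclass
class TermRecord:
    """Hypothetical record holding the data categories listed above."""
    term: str
    status: str                       # usage status: "preferred", "admitted", "deprecated"
    definition: str = ""
    definition_source: str = ""
    subject: str = ""
    grammar: str = ""                 # e.g. part of speech and grammatical gender
    usage_note: str = ""
    examples: list[str] = field(default_factory=list)


def approved(records: list[TermRecord]) -> list[TermRecord]:
    """Usage status filter: never send deprecated terms as recommendations."""
    return [r for r in records if r.status != "deprecated"]


def render(record: TermRecord) -> str:
    """Render only the filled-in categories as short labeled lines for a prompt."""
    lines = [f"Term: {record.term} ({record.status})"]
    if record.definition:
        source = f" [Source: {record.definition_source}]" if record.definition_source else ""
        lines.append(f"Definition: {record.definition}{source}")
    for label, value in (("Subject", record.subject), ("Grammar", record.grammar),
                         ("Usage note", record.usage_note)):
        if value:
            lines.append(f"{label}: {value}")
    lines.extend(f"Example: {example}" for example in record.examples)
    return "\n".join(lines)


records = [
    TermRecord("E-Mail", "preferred", definition="Electronically transmitted message",
               subject="IT", grammar="noun, feminine (die E-Mail)",
               examples=["Bitte senden Sie uns eine E-Mail."]),
    TermRecord("Email", "deprecated"),
]
print("\n\n".join(render(r) for r in approved(records)))
```

Skipping empty fields keeps the prompt short, while every category that is maintained in the termbase automatically reaches the model.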

Conclusion

Terminology, the basis for efficient, clear, and precise "human" communication, also proves to be an excellent guardrail for optimizing generative AI applications. An existing terminology database can significantly improve AI output, both in terms of language and content. This, in turn, raises the value of terminology within the organization and highlights its importance as a strategic asset that can drive better communication, productivity, and decision-making.

TAG is a lightweight, precise, and extremely flexible method of augmenting AI prompts for content generation. It lends itself to a collaborative implementation that leverages the strengths of both terminology and IT teams:

- Terminologists bring their expertise in terminology methods, their understanding of the termbase structure, and the knowledge needed to extract exactly the required data.

- IT professionals know how to access the termbase technology through real-time API calls.

By combining these skills, organizations can efficiently implement TAG, enabling AI models to generate high-quality, accurate, and context-specific content.

At the same time, AI also has the potential to reduce the effort required to create and maintain terminology. As a result, the business model for terminology is greatly improved by simultaneously promising lower costs and higher value. As GenAI continues to evolve and become more prevalent, having a well-maintained terminology system will become increasingly important. It is therefore essential that organizations prioritize terminology management now so that GenAI has the foundation it needs to function effectively.

1 Dina Genkina, AI Prompt Engineering Is Dead - Long live AI prompt engineering, in: IEEE Spectrum.
